Introduction

Agentic AI (artificial intelligence) refers to systems that can make decisions and act on them independently, and that are designed to emulate human cognitive functions such as reasoning, learning, and planning. This technology could reshape sectors from healthcare and finance to education. However, rapid advances in autonomous AI have raised significant ethical challenges. They center on questions of responsibility, autonomy, and control, which become pressing as AI systems begin to operate in complex and often unpredictable environments.

In this article, we will explore the ethical concerns surrounding agentic AI, focusing on the key areas of responsibility, autonomy, and control. We will delve into the implications of these issues, discuss how they affect society, and examine potential solutions to ensure that AI systems align with human values and ethics.

What is Agentic AI?

Before turning to the ethical challenges, it helps to define agentic AI and how it differs from traditional AI systems. Agentic AI operates autonomously, making decisions without direct human input. These systems are designed to learn, adapt, and perform tasks that usually require human intelligence, such as planning and problem-solving. Traditional AI typically follows fixed rules or performs a single narrow task within predefined constraints; agentic AI systems, by contrast, evaluate situations and take actions based on real-time data and experience.

Autonomous vehicles, predictive healthcare systems, and smart financial advisors are commonly cited examples: each makes decisions and takes actions based on its programming and on what it learns from its environment.

Ethical Challenge #1: Responsibility

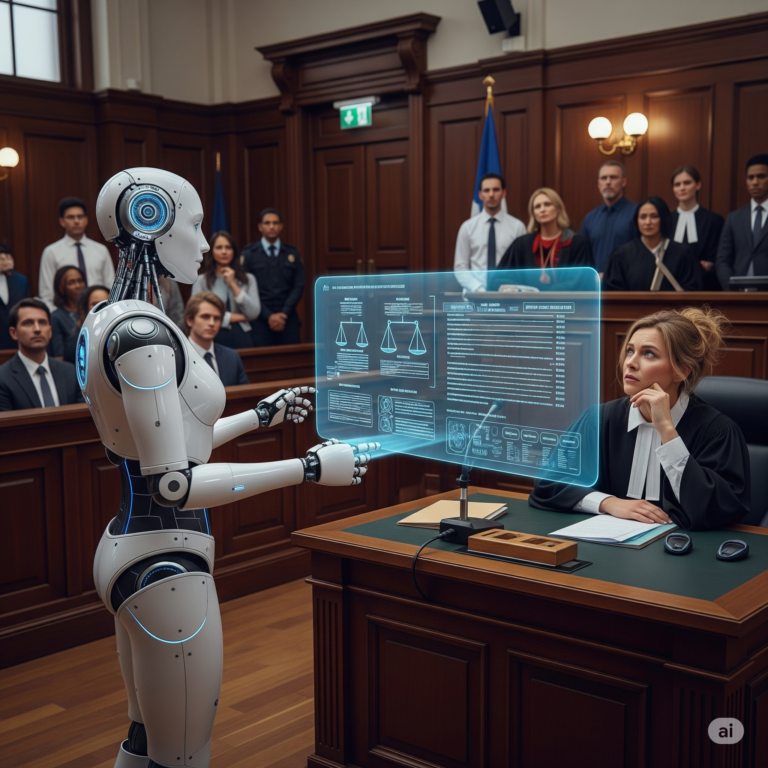

One of the most pressing ethical issues surrounding agentic AI is determining who is responsible when things go wrong. In traditional systems, the responsibility for errors or failures typically lies with the developers, designers, or operators of the system. However, when AI systems operate autonomously and make decisions independently, the question arises: who is accountable for the actions of the AI?

- Legal and Moral Responsibility: If an AI makes a decision that results in harm—whether in a medical diagnosis, financial transaction, or even a car accident—who is to blame? Should the creators of the AI, the operators, or the AI system itself be held responsible? Currently, legal frameworks around AI liability are not fully developed, creating ambiguity about accountability.

- Designing Accountability Mechanisms: To address this, AI systems must be designed with mechanisms that ensure accountability. This may include keeping detailed logs of decision-making processes and creating protocols for human oversight in critical situations. However, the more autonomous the AI becomes, the more complex these mechanisms will need to be.
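
The decision logs mentioned above can be sketched in a few lines of Python. This is a minimal illustration, not a reference design: the `DecisionRecord` and `DecisionLog` names and every field are hypothetical, and the log lives in memory, whereas a deployed system would need durable, tamper-evident storage.

```python
import json
import time
from dataclasses import dataclass, asdict, field
from typing import Any, Dict, List, Optional


@dataclass
class DecisionRecord:
    """One audit-trail entry: what the system saw, what it chose, and why."""
    agent_id: str
    inputs: Dict[str, Any]
    decision: str
    rationale: str
    human_reviewer: Optional[str] = None  # set when a person signs off
    timestamp: float = field(default_factory=time.time)


class DecisionLog:
    """Append-only, in-memory log (hypothetical; real systems need durable storage)."""

    def __init__(self) -> None:
        self._records: List[DecisionRecord] = []

    def record(self, rec: DecisionRecord) -> None:
        self._records.append(rec)

    def unreviewed(self) -> List[DecisionRecord]:
        """Decisions that no human has signed off on yet."""
        return [r for r in self._records if r.human_reviewer is None]

    def to_jsonl(self) -> str:
        """Serialize the log as JSON Lines, one record per line, for auditors."""
        return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in self._records)
```

Recording the rationale alongside the inputs is what lets a reviewer later reconstruct why a decision was made, and the `unreviewed()` query gives an oversight team a simple work queue.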

Ethical Challenge #2: Autonomy

Another significant challenge is autonomy itself. As AI systems become more capable of independent decision-making, the question becomes how much autonomy they should be granted.

- Loss of Human Control: One concern is that granting AI systems too much autonomy could result in a loss of human control. In autonomous military drones or automated clinical decision-making, for example, humans may be unable to intervene or override AI decisions in critical situations. How much autonomy is appropriate, and where should the line be drawn?

- The Risk of AI Acting in Unexpected Ways: The more autonomous an AI system is, the more likely it is to operate in ways that were not anticipated by its creators. While AI is designed to make decisions based on data, the complexity of the data and environments it learns from can lead to unpredictable behavior. This could result in unintended consequences or ethical dilemmas that were not foreseen during the development process.

- Ensuring Ethical AI Autonomy: Ensuring that AI systems act in ethically responsible ways is paramount. Building ethical decision-making frameworks into the system and maintaining continuous human oversight both help. In high-stakes situations, a “kill switch” or another way for humans to regain control should be implemented to prevent harmful outcomes.
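
One way to picture the kill switch is as a shared flag that the agent checks before each action. The sketch below is illustrative only; `KillSwitch` and `run_agent` are hypothetical names, and the agent is simplified to a list of callables.

```python
import threading
from typing import Callable, Iterable, List


class KillSwitch:
    """Thread-safe flag that a human operator can set to halt the agent."""

    def __init__(self) -> None:
        self._stopped = threading.Event()

    def trigger(self) -> None:
        self._stopped.set()

    def is_triggered(self) -> bool:
        return self._stopped.is_set()


def run_agent(actions: Iterable[Callable[[], str]], switch: KillSwitch) -> List[str]:
    """Run actions in order, checking the switch before starting each one."""
    completed: List[str] = []
    for action in actions:
        if switch.is_triggered():
            break  # do not start any further action
        completed.append(action())
    return completed
```

Note the limitation of this design: the switch is only consulted between actions, so an action already in progress runs to completion. A system that must stop mid-action needs a stronger mechanism, such as running the agent in a separate process that can be terminated.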

Ethical Challenge #3: Control

Control over AI systems is closely related to both responsibility and autonomy. As AI becomes more autonomous, ensuring proper control mechanisms is essential to mitigate risks and prevent unintended consequences.

- The Power of Autonomous Systems: In areas like healthcare, finance, or law enforcement, autonomous AI systems could wield significant power over individuals’ lives. Who should have control over these systems, and how should that control be exercised? While AI can provide efficiency and speed, ensuring that it does not become too powerful or unaccountable is crucial.

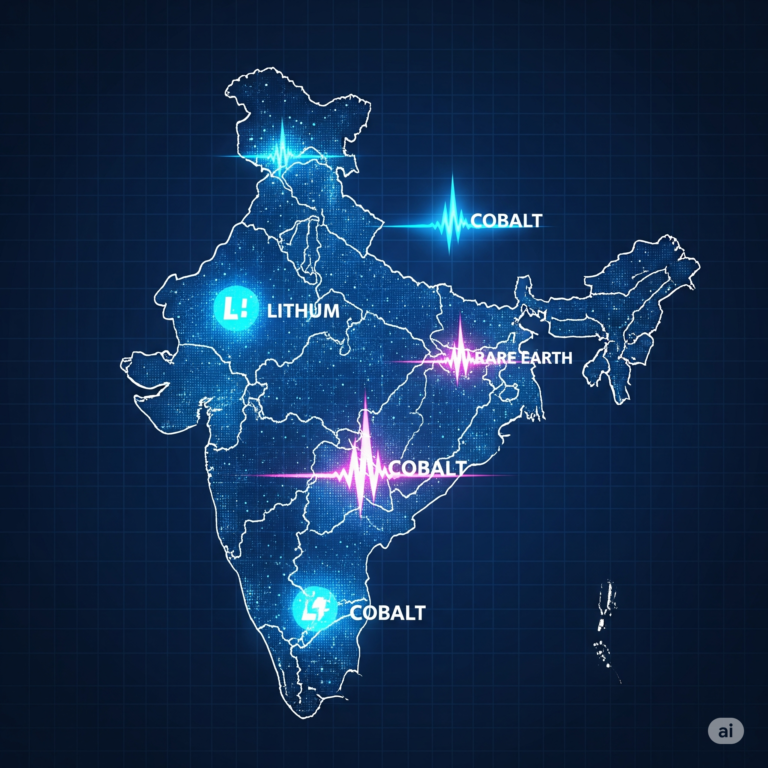

- Bias and Fairness in Control: The issue of control is further complicated by the potential for bias in AI decision-making. AI systems are trained on data, and if that data is biased, it can lead to unfair or discriminatory outcomes. For example, biased healthcare algorithms might recommend inappropriate treatments based on flawed data. Proper controls need to be in place to detect and reduce bias in the training data and to check that outcomes are fair across groups.

- Governance and Regulation: Governments and organizations must establish clear governance frameworks for controlling the use of agentic AI. This includes creating regulations for AI development, ensuring transparency in decision-making, and instituting checks and balances to prevent misuse of AI technologies.
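
The bias controls described in the list above can start with something as simple as comparing outcome rates across groups. The following sketch computes the gap in positive-outcome rates, one common fairness check often called demographic parity. The function names and the sample data are made up for illustration, and a small gap on this one metric does not by itself show that a system is fair.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def approval_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Fraction of positive outcomes per group, from (group, approved) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(rates: Dict[str, float]) -> float:
    """Largest difference in positive-outcome rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Made-up sample: group A is approved 3 times out of 4, group B once out of 4.
sample = [("A", True), ("A", True), ("A", False), ("A", True),
          ("B", True), ("B", False), ("B", False), ("B", False)]
rates = approval_rates(sample)   # {"A": 0.75, "B": 0.25}
gap = parity_gap(rates)          # 0.5
```

A governance process might flag any model whose gap exceeds an agreed threshold for human review; the threshold itself is a policy choice, not something the code can decide.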

Potential Solutions to Ethical Challenges

Addressing the ethical challenges of agentic AI requires a multi-faceted approach that includes technological, regulatory, and societal measures. Here are some potential solutions:

- Clear Accountability Frameworks: Legal and regulatory bodies must develop clear frameworks to define liability in cases involving autonomous AI systems. This should include rules for assigning responsibility based on the level of autonomy and human involvement in decision-making.

- Transparent AI Design: Developers should design AI systems with transparency in mind. This means ensuring that decision-making processes are explainable, traceable, and understandable to humans. When an AI system makes a decision, it should be able to explain how and why it made that choice.

- Human-in-the-Loop (HITL) Approaches: For critical decisions, such as those in healthcare or law enforcement, maintaining a human-in-the-loop approach can ensure that human judgment is involved in decision-making. This helps maintain oversight and control over autonomous systems.

- Ethical AI Standards: Industry-wide standards and ethical guidelines should be established for AI development. These standards should focus on ensuring fairness, transparency, and accountability in AI systems.

- AI Monitoring and Regulation: Governments and regulatory bodies must implement continuous monitoring of AI systems to ensure they remain aligned with societal values and ethical standards. This could include creating regulatory bodies that oversee the development and deployment of AI technologies.
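
The human-in-the-loop approach from the list above can be sketched as a gate that lets low-risk actions through and escalates everything else to a person. This is a simplified illustration under stated assumptions: the `Proposal` type, the `risk` score in the range 0 to 1, and the default threshold of 0.3 are all hypothetical, and producing a trustworthy risk score is itself a hard problem.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Proposal:
    action: str
    risk: float  # assumed score in [0, 1] from some upstream risk model


def decide(
    proposal: Proposal,
    ask_human: Callable[[Proposal], bool],
    risk_threshold: float = 0.3,
) -> str:
    """Auto-approve low-risk actions; escalate everything else to a person."""
    if proposal.risk < risk_threshold:
        return "auto-approved"
    # At or above the threshold, the human reviewer has the final say.
    return "approved by human" if ask_human(proposal) else "rejected by human"
```

Passing the reviewer in as a callable keeps the gate independent of how the human is actually consulted, whether through a ticket queue, a dashboard, or a pager.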

FAQs on Ethical Challenges of Agentic AI

- What is agentic AI?

- Agentic AI refers to artificial intelligence systems capable of making decisions and taking actions autonomously, without direct human input.

- Why is responsibility a major ethical issue in agentic AI?

- Responsibility is a key issue because it is often unclear who should be held accountable when AI systems make decisions that lead to harm.

- Can agentic AI make ethical decisions?

- Agentic AI can make ethical decisions if it is programmed with ethical decision-making frameworks, but there is still uncertainty around the reliability of these systems.

- What is the role of autonomy in agentic AI?

- Autonomy in agentic AI refers to its ability to make independent decisions, which raises concerns about the loss of human control.

- How can AI bias be controlled?

- Bias can be reduced by using diverse, representative data sets and by applying fairness checks and mitigation algorithms so that decisions made by AI systems are more equitable.

- What is the role of control in agentic AI?

- Control in agentic AI involves ensuring that humans can intervene in critical situations, and that AI systems are not allowed to operate unchecked.

- Can agentic AI be regulated?

- Yes, agentic AI can be regulated through policies and frameworks designed to ensure its ethical use, such as accountability measures and oversight.

- Who is responsible for the actions of agentic AI?

- Responsibility is often debated, but typically, the developers, operators, or organizations that deploy the AI system are held accountable.

- How do AI systems make ethical decisions?

- AI systems approximate ethical decision-making through pre-programmed guidelines, machine learning models, and algorithms that weigh the consequences of different actions. How reliable these approaches are remains uncertain.

- Should there be a global standard for agentic AI ethics?

- A global standard for agentic AI ethics would help ensure consistency and fairness in how AI is developed and deployed across different countries and industries.

- How can we prevent agentic AI from making harmful decisions?

- By implementing transparency, fairness, and human oversight in AI systems, harmful decisions can be minimized.

- Is there a way to stop AI if it acts dangerously?

- Yes, AI systems can be equipped with a “kill switch” or emergency stop mechanism that allows human operators to intervene if necessary.

- How do ethical AI systems differ from regular AI?

- Ethical AI systems are designed with explicit moral guidelines so that their decisions align with human values, while regular AI systems are typically optimized for efficiency and performance alone.

- Can agentic AI be used in military applications?

- While agentic AI can be used in military applications, its ethical implications are significant, and there is ongoing debate about its role in warfare.

- How can developers ensure ethical AI design?

- Developers can ensure ethical AI design by incorporating ethical guidelines, bias mitigation, transparency, and regular audits into the development process.

Conclusion

The ethical challenges of agentic AI, particularly around responsibility, autonomy, and control, need to be addressed as AI technology continues to evolve. As AI systems become more autonomous, clear accountability mechanisms, ethical decision-making frameworks, and robust governance become even more important. With transparency, fairness, and human oversight built in, agentic AI can serve humanity in a way that aligns with our values and priorities.
