Key Highlights:

- Emergency “Code Red” Response: OpenAI CEO Sam Altman declared an internal emergency in early December 2025, halting non-core initiatives and accelerating GPT-5.2’s release by weeks in direct response to Google’s Gemini 3 overtaking OpenAI’s models on the influential LM Arena leaderboard.

- Market Dominance Collapse: Anthropic’s Claude seized 32% of enterprise LLM market share (mid-2025), overtaking OpenAI’s 25%. That is a stunning reversal from the 50% OpenAI held a year earlier. Meanwhile, Gemini 3 topped nearly every major AI benchmark.

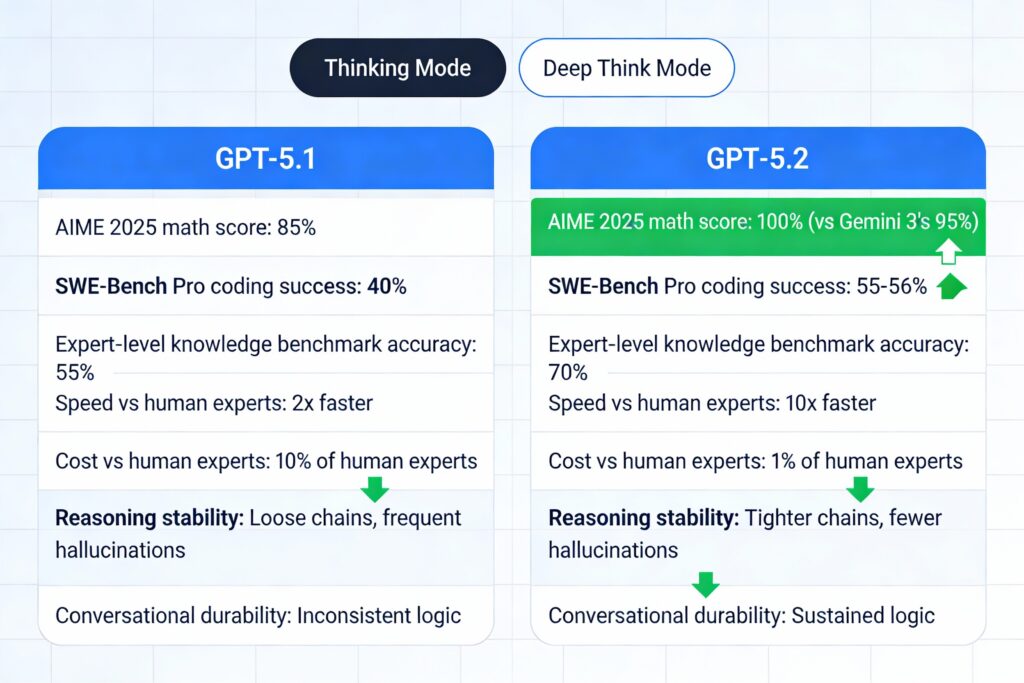

- GPT-5.2 Technical Breakthrough: Scores 100% on AIME 2025 mathematics (vs Gemini 3’s 95%, both without tools) and 55-56% on the rigorous SWE-Bench Pro software engineering benchmark. OpenAI reports it works 10x faster than human experts at under 1% of the cost, with marked reductions in hallucination and more stable reasoning.

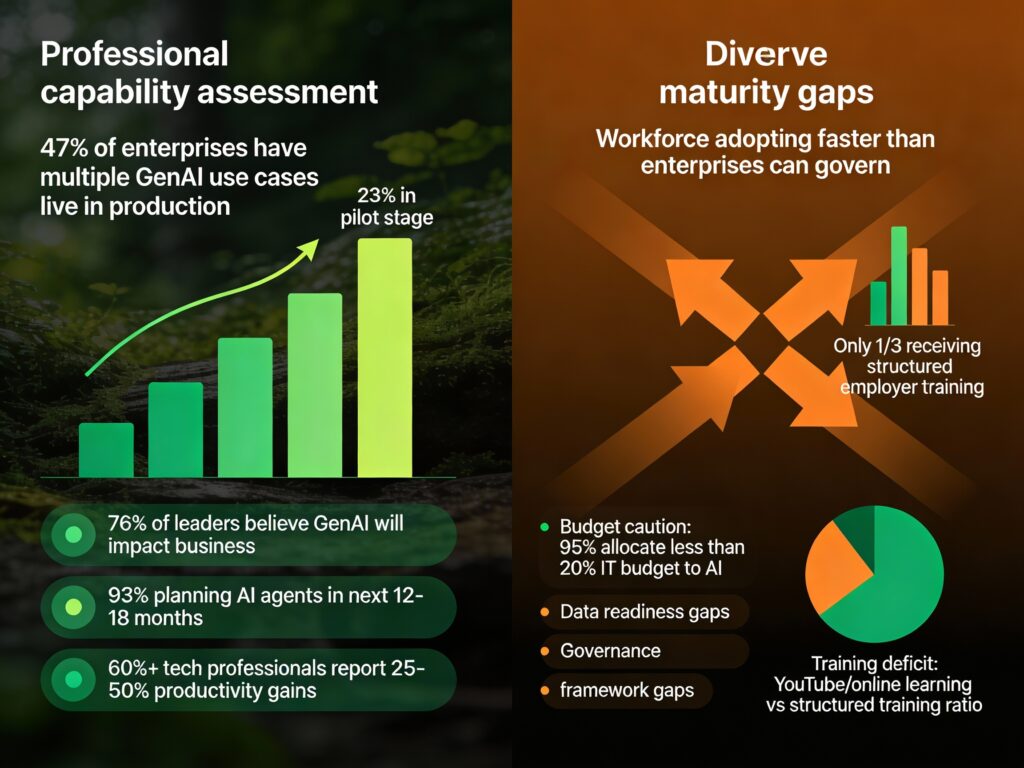

- India’s Adoption-Maturity Gap: 47% of Indian enterprises have multiple GenAI use cases in production (23% are in pilots), yet only 1 in 3 workers receives structured employer training. Workforce adoption is outpacing organizational governance capability by a dangerous margin.

The December 2025 Shock—How Gemini 3 Changed Everything

When an Empire Cracks

For years, OpenAI owned the AI narrative. ChatGPT became synonymous with generative AI itself. Sam Altman became the public face of the AI revolution. OpenAI’s valuation soared into the hundreds of billions of dollars.

Then, in November 2025, Google’s Gemini 3 topped the LM Arena leaderboard, arguably the most influential ranking of AI model quality, built on human preference voting (source: aicerts).

Not by a little. The data showed:

- Gemini 3 Pro: 1501 Elo rating

- Grok 4.1: 1478 Elo

- Claude Opus 4.5: 1470 Elo

- GPT-5.1 (OpenAI’s then-flagship): 1457 Elo

Gemini 3 wasn’t just ahead—it was leading across text, vision, coding, math, and long-context reasoning tasks. Google’s AI surpassed OpenAI’s across nearly every major benchmark.

The leaderboard matters. It’s not just academic prestige: it shapes which models enterprises choose, developers adopt, and researchers build upon. It is one of the scores that drive market share.
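To put the ratings listed above in perspective, the classic Elo formula converts a rating gap into an expected head-to-head preference rate: a model rated R_A is expected to be preferred over a model rated R_B with probability 1 / (1 + 10^((R_B - R_A) / 400)). The sketch below assumes LM Arena’s scores can be read on that classic 400-point Elo scale. The leaderboard reportedly fits a Bradley-Terry model and reports it on an Elo-like scale, so treat the outputs as rough approximations rather than exact win rates.

```python
# Sketch: convert an Elo-style rating gap into an expected head-to-head win rate.
# Assumes the classic Elo logistic curve (base 10, 400-point scale).

def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that model A is preferred over model B in a single vote."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Ratings as listed in the article (LM Arena, November 2025).
ratings = {
    "Gemini 3 Pro": 1501,
    "Grok 4.1": 1478,
    "Claude Opus 4.5": 1470,
    "GPT-5.1": 1457,
}

leader = "Gemini 3 Pro"
for name, rating in ratings.items():
    if name == leader:
        continue
    p = expected_score(ratings[leader], rating)
    print(f"{leader} vs {name}: preferred in about {p:.0%} of votes")
```

Under these assumptions, Gemini 3 Pro’s 44-point lead over GPT-5.1 corresponds to being preferred in roughly 56% of head-to-head votes, and its lead over Grok 4.1 to about 53%: a clear edge, but a narrow one in absolute terms.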

The Enterprise Betrayal That Hurt Worse

But the leaderboard loss was almost secondary to a more painful development: Anthropic had eaten OpenAI’s enterprise lunch while everyone was distracted.

According to Menlo Ventures’ comprehensive LLM Market Update (August 2025):

- Anthropic: 32% of enterprise market share

- OpenAI: 25%

- Google: 20%, and growing rapidly

This wasn’t a typo. OpenAI, which commanded 50% enterprise market share just 12 months earlier, had been cut in half and overtaken by a smaller rival.

Why did Anthropic win?

Enterprises discovered that, with model performance at rough parity, governance and compliance trump everything else. Anthropic’s Claude offered:

- Superior enterprise integrations

- Better compliance and data privacy

- Governance controls for regulated industries

- Reliability in production environments

- Better performance on structured reasoning and code

Meanwhile, OpenAI was perceived as chasing consumer viral moments rather than enterprise reliability.

Sam Altman’s Meltdown Moment

This double-whammy—losing the benchmark leaderboard and the enterprise revenue battle—triggered an extraordinary reaction.

In early December 2025, Sam Altman issued a rare “Code Red” internal alert.

“Code Red” in Silicon Valley doesn’t mean a minor course correction. It means emergency mobilization. It means:

- All non-core projects halted immediately

- Engineering teams reshuffled to focus exclusively on ChatGPT improvements

- GPT-5.2’s release pulled forward from late December to a reported December 9 target (roughly a two-week compression)

- Testing time and quality-at-scale work subordinated to speed

Altman was essentially admitting: We’re losing. We need to fight. Now.

GPT-5.2—The Counterpunch

What’s Actually Different?

GPT-5.2, released December 12, 2025, isn’t a transformational leap like the jump from GPT-4 to GPT-5. It’s a refinement: targeted improvements in the areas where Gemini 3 had gained ground.

Reasoning Accuracy

- Tighter logical chains with more structured reasoning

- Better multi-step problem solving

- Improved ability to sustain complex logic over extended conversations

Hallucination Reduction

- Substantially fewer fabricated facts, according to OpenAI

- More careful attribution of information sources

- Improved differentiation between certain and uncertain knowledge

Professional-Grade Reliability

- Consistent performance over long conversations

- Better error handling and acknowledgment of uncertainty

- Enterprise-ready stability

Specific Benchmark Victories

Where GPT-5.2 wins:

- AIME 2025 (mathematics): 100% vs Gemini 3’s 95% (both without tools), a symbolic victory in one of the domains where AI reasoning is most rigorously tested (source: Wired)

- SWE-Bench Pro (software engineering): 55-56% success rate on rigorous real-world coding tasks

- Knowledge work (OpenAI’s GDPval evaluation): beat or tied industry professionals in about 70% of comparisons across 40+ occupations

- Speed: about 10x faster than the human experts on those tasks

- Cost: less than 1% of the human experts’ cost for comparable output

For specific domains (math, reasoning, multi-file software projects), GPT-5.2 edges out Gemini 3’s “Deep Think” mode.

But here’s the honest assessment: At this level of competition, the differences are marginal. Both are phenomenally capable. The leaderboard rankings fluctuate based on which specific tasks are weighted in human evaluation.

The real difference isn’t technical—it’s positioning.

What This Reveals About OpenAI’s Weakness

GPT-5.2 is technically solid but not dominant. That’s the problem.

Satya Nadella, CEO of Microsoft, OpenAI’s largest investor, might privately admit what’s happening: OpenAI is losing on product-market fit, not capability.

Enterprises prefer Claude because it:

- Integrates better with existing enterprise systems

- Offers better compliance certifications

- Provides superior contract terms and support

- Works better in regulated industries

Benchmarks don’t measure these factors. Yet these factors are what determine actual revenue.

GPT-5.2 will likely reclaim some leaderboard positions. But it won’t fix OpenAI’s deeper problem: the market is fragmenting, and capability alone doesn’t win anymore.

The Bigger Picture—Why This Matters for India

The Three-Player Endgame

Before 2025, people talked about “AI companies” as if OpenAI were the obvious leader. That narrative has shattered.

Today, the global AI landscape is dominated by three serious players:

- OpenAI: Valued at roughly $500B after an October 2025 share sale, still dominant in consumer AI (ChatGPT reports around 800 million weekly users), but losing the enterprise and benchmark races

- Google: Massive distribution advantage through Workspace, Search, Android, and YouTube; Gemini 3 leadership across benchmarks; billions in revenue from advertising-enhanced AI services

- Anthropic: Smallest by headcount, but now leading enterprise AI adoption; valued at $183B (September 2025); Claude commanding 32% enterprise market share; growing faster than either competitor

Meta (with Llama’s open-source strategy) and Chinese competitors (DeepSeek, others) are secondary players but growing.

For India, this matters because India’s enterprises depend on choosing the right AI partner—and the choice is no longer obvious.

India’s Specific Vulnerability

Some 47% of Indian enterprises already run GenAI in production, marking rapid progress. But this adoption is built on borrowed AI: Google, OpenAI, and Anthropic models.

This creates strategic risk:

- API Dependency: If OpenAI’s API pricing spikes (it can), Indian companies have limited alternatives without reworking systems

- Data Sovereignty: Indian enterprise data flows through US servers, raising security and regulatory concerns

- Regulatory Exposure: If US export controls tighten on advanced AI (plausible under future administrations), Indian enterprises lose access

- Technology Colonialism: India outsources its most critical digital intelligence infrastructure to foreign companies
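On the engineering side, a common way to soften the API-dependency risk is to keep application code behind a thin, provider-neutral interface, so that switching vendors means swapping an adapter rather than reworking systems. The sketch below uses hypothetical adapters (`ProviderA`, `ProviderB`) that stand in for real vendor SDK calls; it does not call any actual OpenAI, Google, or Anthropic API.

```python
from typing import List, Optional, Protocol


class ChatModel(Protocol):
    """Provider-neutral interface that the rest of the application codes against."""

    name: str

    def complete(self, prompt: str) -> str:
        ...


class ProviderA:
    """Hypothetical adapter; a real one would wrap one vendor's SDK call here."""

    name = "provider-a"

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] answer to: {prompt}"


class ProviderB:
    """Hypothetical second adapter, e.g. a domestic or self-hosted model."""

    name = "provider-b"

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] answer to: {prompt}"


def complete_with_fallback(models: List[ChatModel], prompt: str) -> str:
    """Try each configured provider in order; move on if one is down or cut off."""
    last_error: Optional[Exception] = None
    for model in models:
        try:
            return model.complete(prompt)
        except Exception as err:  # outage, quota exhaustion, revoked access, etc.
            last_error = err
    raise RuntimeError("all configured providers failed") from last_error


def summarize_claim(models: List[ChatModel], claim_text: str) -> str:
    # Business logic depends only on the ChatModel interface, never on a vendor SDK.
    return complete_with_fallback(models, f"Summarize this claim: {claim_text}")


# Changing vendors, or their priority order, is a configuration change, not a rewrite.
PROVIDERS: List[ChatModel] = [ProviderA(), ProviderB()]
print(summarize_claim(PROVIDERS, "hospitalization, 3 days"))
```

This does not remove dependency on foreign models, but it lowers the switching cost if pricing, availability, or terms of service change.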

The India AI Governance Guidelines (November 2025) and UNESCO’s AI Ethics Framework are steps forward, but they don’t solve the core problem: India lacks indigenous, competitive AI models.

The Enterprise-Workforce Maturity Gap—India’s Danger Zone

The Paradox: Workers Ahead, Organizations Behind

India’s AI transformation reveals a dangerous paradox:

Workforce Adoption: 60% of Indian tech professionals report 25-50% productivity gains from GenAI. India’s workforce is self-educating, experimenting, and advancing at pace.

Enterprise Maturity: Yet only 1 in 3 of these workers receives structured employer training. Most learning happens through YouTube, online communities, and self-experimentation.

This creates a skills-governance mismatch:

Workers’ use of ChatGPT has run ahead of their organizations’ formal policies. They’re using generative AI for tasks that might violate data privacy, information security, or compliance requirements, not out of malice, but because their organizations haven’t established clear guardrails.

The Numbers Behind the Gap

EY-CII’s latest India AI report (November 2025) reveals:

- 47% of enterprises have multiple GenAI use cases live in production

- 23% in pilot stage

- 76% of leaders believe GenAI will have major business impact

- 63% believe their organizations are ready

But then:

- Over 95% allocate less than 20% of IT budgets to AI—cautious, measured investment

- Governance frameworks lagging behind deployment

- Data quality and readiness gaps in most organizations

- Skills training deficits—most employees self-teaching vs. employer-supported programs

This is the classic high-risk profile: organizations moving fast without the governance, data, and skills to match. It invites data breaches, compliance violations, and operational failures when GenAI systems hallucinate or produce biased outputs in real-world applications.

India’s Formalization Opportunity

Unlike developed economies that adopted AI ad hoc, India has a chance to build governance-first AI adoption. But the window is closing.

The next 12-18 months are critical for India to:

- Mandate AI governance frameworks before enterprises scale further

- Establish data readiness standards for AI systems in sensitive sectors (healthcare, finance, legal, government)

- Create structured training pathways rather than leaving 67% of workers to self-learn

- Build indigenous alternatives to foreign AI platforms

Multi-Dimensional Policy Challenge

Economic Competitiveness

The Productivity Paradox

AI promises to boost India’s productivity by 2.61% by 2030 in the organized sector (affecting 38 million employees) and an additional 2.82% in the unorganized sector—potentially adding $500-600 billion to GDP by 2035.

But this potential is only realized if:

- Enterprises modernize workflows (not just bolt-on AI)

- Workers receive structured reskilling (not self-learning)

- Data infrastructure is modernized (most organizations still use legacy systems)

- Indigenous AI capabilities reduce dependency on foreign platforms

Without these, India may capture only about half of the potential gain.

Enterprise Concentration Risk

Anthropic and OpenAI dominating enterprise AI means India’s digital economy depends on US company decisions: pricing, API availability, feature updates, terms of service.

This is a structural vulnerability if bilateral tensions rise or sanctions are imposed.

Governance and Regulation

The Transparency-Innovation Trade-off

The “black box” problem is acute in India. AI systems making welfare allocation decisions, loan approvals, or legal recommendations operate opaquely—beneficiaries don’t know why they were denied.

UNESCO’s 2021 Recommendation on the Ethics of AI calls for transparency and accountability, but India’s core IT law (the Information Technology Act, 2000) is a quarter-century old, was not written with AI in mind, and is inadequate for it.

India needs:

- Mandatory algorithm audits for high-risk systems

- Explainability requirements (AI must explain its reasoning)

- Human-in-the-loop mandates for critical decisions

- Grievance redressal mechanisms for those harmed by AI

The Delhi Police AI Case Study: Delhi Police’s AI tools include manual verification layers before final action—acknowledging that AI alone shouldn’t decide freedom/incarceration. This is the right governance model, but most organizations haven’t adopted it.
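Human-in-the-loop mandates translate directly into system design. The minimal sketch below treats the model’s output as a recommendation only: adverse or low-confidence recommendations are queued for a human reviewer, and every final decision records who made it. The `Recommendation` and `Decision` types, the 0.9 confidence threshold, and the routing rule are hypothetical choices for illustration, not a description of Delhi Police’s or any other agency’s actual workflow.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Recommendation:
    case_id: str
    action: str        # e.g. "approve" or "deny"
    confidence: float  # model-reported confidence in [0, 1]
    rationale: str     # plain-language explanation shown to the reviewer


@dataclass
class Decision:
    case_id: str
    action: str
    decided_by: str    # "system" or "human:<reviewer id>"
    rationale: str


# Assumed threshold for illustration; in practice policy or a regulator would set it.
CONFIDENCE_THRESHOLD = 0.9


def needs_human_review(rec: Recommendation) -> bool:
    # Adverse actions always go to a person, as does anything the model is unsure about.
    return rec.action != "approve" or rec.confidence < CONFIDENCE_THRESHOLD


def route(rec: Recommendation, review_queue: List[Recommendation]) -> Optional[Decision]:
    """Auto-finalize only confident approvals; queue everything else for a human."""
    if needs_human_review(rec):
        review_queue.append(rec)
        return None  # no final action until a human signs off
    return Decision(rec.case_id, rec.action, "system", rec.rationale)


def human_decide(rec: Recommendation, reviewer: str, action: str, reason: str) -> Decision:
    # The reviewer may accept or override the model; the record names who decided.
    return Decision(rec.case_id, action, f"human:{reviewer}", reason)


pending: List[Recommendation] = []
route(Recommendation("C-1", "approve", 0.97, "within policy limits"), pending)
route(Recommendation("C-2", "deny", 0.99, "excluded condition"), pending)
print(len(pending))  # 1: the denial waits for a human
final = human_decide(pending[0], "officer-7", "approve", "condition is covered")
print(final.decided_by)  # human:officer-7
```

Routing every adverse action to a person, regardless of model confidence, mirrors the principle that AI alone should not decide consequential outcomes; the threshold only governs which favorable outcomes can be automated.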

Ethics and Rights

Fairness and Algorithmic Bias

AI systems amplify existing biases in training data. If trained mostly on English-language data, they perform worse in Indian languages. If trained mostly on data from wealthier populations, they make biased decisions about loan eligibility or welfare distribution.
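A first-pass fairness audit can be as simple as comparing outcome rates across groups. The sketch below computes each group’s approval and denial rates and the ratio of the lowest to the highest approval rate. The records are hypothetical toy data, and the 0.8 cutoff is an assumption borrowed from the US “four-fifths” rule of thumb for adverse impact, not an Indian legal standard.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def approval_rates(records: List[Tuple[str, bool]]) -> Dict[str, float]:
    """Map each group to the share of its claims that were approved."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, was_approved in records:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group approval rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest


# Hypothetical toy data for illustration only: (group, claim approved?)
records = (
    [("urban", True)] * 90 + [("urban", False)] * 10
    + [("rural", True)] * 65 + [("rural", False)] * 35
)

rates = approval_rates(records)
ratio = disparate_impact_ratio(rates)
for group, rate in rates.items():
    print(f"{group}: approval {rate:.0%}, denial {1 - rate:.0%}")
print(f"disparate impact ratio: {ratio:.2f}")

# Assumed cutoff, borrowed from the US "four-fifths" rule of thumb.
if ratio < 0.8:
    print("flag for review: approval rates differ substantially across groups")
```

With these toy numbers the ratio is about 0.72, so the audit would flag the system. A real audit would also compare error rates, such as wrongful denials, per group, since a single overall accuracy figure can hide exactly this kind of gap.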

Accountability Vacuum

When a model trained by OpenAI and deployed in India makes a harmful decision, who is responsible?

- OpenAI (for insufficient safeguards)

- The enterprise deploying it (for inadequate oversight)

- The Indian government (for inadequate regulation)

Current law is silent. This vacuum invites both unaccountability and over-caution (organizations avoiding AI use entirely).

Policy Recommendations for India

Immediate Actions (2025-2026)

1. AI Safety and Governance Authority

Establish an AI Safety Board under NITI Aayog or MeitY with power to:

- Set performance benchmarks and audit standards

- Mandate algorithmic impact assessments for high-risk AI

- Establish grievance redressal for AI-caused harms

- Coordinate with state governments on sectoral regulation

2. Enterprise Readiness Program

Government-funded initiative for:

- Mandatory AI governance frameworks

- Data quality and readiness standards

- Structured training for 10 million IT professionals

- SME-focused AI toolkits and templates

3. Regulatory Framework Updates

Modernize Information Technology Act with:

- AI-specific provisions (transparency, bias auditing, human oversight requirements)

- Sectoral guidelines (healthcare, finance, education, criminal justice)

- Liability standards (who is responsible for harmful AI decisions)

- International harmonization (alignment with EU AI Act, UNESCO standards)

Medium-Term Reforms (2026-2028)

1. Indigenous AI Development

- National Large Language Model (LLM) for critical government and research use

- Domain-specific models for Indian languages, legal systems, healthcare, agriculture

- Public-private R&D consortiums with major AI labs

- Compute infrastructure expansion (national AI cloud, supercomputing)

2. Skill Development at Scale

- National AI Literacy Mission targeting diverse demographics (rural, women, minorities)

- Reskilling for workers in displacement-vulnerable sectors (data entry, basic coding, content writing)

- University curriculum integration across STEM and social sciences

- Industry-academia partnerships for applied AI education

3. Data Sovereignty Strategy

- Build national trusted data commons for AI training

- Incentivize local data infrastructure vs. cloud-dependent models

- Establish data governance standards protecting privacy and sovereignty

Long-Term Vision (2028-2035)

1. Inclusive AI Development

AI for social welfare delivery, healthcare, agriculture, education—not just corporate profits.

2. Global Leadership

Position India as:

- Thought leader in responsible AI governance for developing nations

- Mediator between US and China on AI standards

- Exporter of ethical AI solutions and frameworks

3. Human-Centric Society

Reimagine education, work, and social contracts for an AI-augmented economy—ensuring humans remain at the center of decisions affecting their lives.

Conclusion: India’s AI Moment—Choose Autonomy or Dependency

OpenAI’s desperate “Code Red” and GPT-5.2 launch reveal a brutal truth about the AI age: dominance is temporary, competition is relentless, and yesterday’s leader is today’s cautionary tale.

For India, this should trigger urgent strategic reckoning.

India has a narrow window, perhaps 12-18 months, to:

- Build indigenous AI capabilities before foreign models entrench further

- Establish governance frameworks before enterprises scale unsustainably

- Invest in workforce reskilling before displacement hits vulnerable sectors

- Assert data sovereignty before digital colonialism becomes irreversible

The alternative is a familiar pattern: India becomes a consumer of AI built by others, dependent on foreign pricing, subject to foreign regulation, and unable to shape the technology that increasingly shapes Indian society.

That’s not acceptable for a nation of 1.4 billion people with ambitions to be a developed economy by 2047.

This issue exemplifies 21st-century governance complexity: technology outpacing policy, global competition demanding national strategy, economic opportunity entangled with ethical risk, innovation requiring responsible guardrails.

Key Terms Glossary

| Term | Definition |
|---|---|
| Code Red | Internal emergency alert that halts non-core activities and deploys all resources to a critical problem |
| GPT-5.2 | OpenAI’s latest language model, focused on reasoning accuracy, hallucination reduction, and professional reliability |
| Gemini 3 | Google’s latest AI model, which topped the LM Arena leaderboard and gained market share; includes a Deep Think reasoning mode |
| LM Arena | Influential leaderboard ranking AI models by human preference voting, reported as Elo-style ratings |
| Claude Opus 4.5 | Anthropic’s flagship model; Anthropic’s Claude models held 32% of enterprise LLM market share in mid-2025 |
| Hallucination | AI generating false or fabricated information presented as fact |
| Enterprise Maturity | Organizational readiness to effectively govern, deploy, and benefit from AI systems |
| Algorithmic Transparency | Requirement that AI systems explain their reasoning and decisions in understandable terms |
| Human-in-the-Loop | System design requiring human oversight and final decision authority (not full AI autonomy) |
| Regulatory Sandbox | Controlled environment allowing AI companies to test systems under relaxed rules with oversight |
| Data Sovereignty | A nation’s control over its own data and digital infrastructure (vs. foreign dependency) |
| SWE-Bench Pro | Rigorous software engineering benchmark testing real-world coding capabilities |
| AIME 2025 | American Invitational Mathematics Examination, an advanced math competition used as a benchmark for AI reasoning; GPT-5.2 scored 100% |

UPSC Practice Questions

Mains Questions (250 words each)

Q1: Technology Competition and National Strategy

“The global AI arms race exemplified by OpenAI’s ‘Code Red’ and GPT-5.2 launch reveals that capability alone doesn’t guarantee market dominance; governance, enterprise integration, and strategic partnerships matter equally.” Analyze this statement with reference to the competitive dynamics between OpenAI, Google, and Anthropic, and discuss implications for India’s AI policy. (GS-II/III, 250 words)

Q2: Enterprise Maturity Gap

India’s workforce is adopting AI faster than organizations can govern it responsibly. This creates risks of data breaches, compliance violations, and biased decision-making. Examine policy measures needed to bridge this gap while enabling innovation. (GS-III, 250 words)

Q3: Economic Competitiveness vs. Data Sovereignty

“Relying exclusively on foreign AI platforms (OpenAI, Google, Anthropic) for critical business functions poses strategic vulnerability for India’s digital economy.” Discuss this statement with reference to the economics of AI services, regulatory risks, and the case for indigenous AI capabilities. (GS-II/III, 250 words)

Q4: Ethical AI Governance

Compare UNESCO’s 2021 Recommendation on AI Ethics with India’s current regulatory framework. What legislative and institutional reforms are necessary for India to ensure transparency, accountability, and fairness in AI deployment across governance, healthcare, and finance sectors? (GS-II, 250 words)

150-Word Quick Answer Questions

Q5: Explain Sam Altman’s “Code Red” declaration. What does it reveal about the competitive dynamics in the AI industry?

Q6: How did Anthropic’s Claude overtake OpenAI in enterprise market share despite lower consumer awareness?

Q7: What is the enterprise-workforce maturity gap in India’s AI adoption, and why does it pose governance risks?

Q8: Discuss the concept of “algorithmic transparency” and its relevance to AI deployment in Indian governance.

Ethics Case Study

An Indian insurance company deploys GPT-5.2 to assess health insurance claims and make coverage decisions. The AI achieves 95% accuracy in claim decisions and processes claims 10x faster than human claims officers. However:

- The AI training data is primarily from US health systems, with limited representation of Indian medical practices and disease patterns

- Beneficiaries denied coverage receive a system-generated explanation but cannot understand the AI’s reasoning

- The company hasn’t conducted fairness audits; initial data suggests the system denies more claims for rural populations

- Only senior management received AI training; claims officers (who implement decisions) received none

- Grievance redressal for AI decisions is unclear

Questions:

- What ethical principles are violated in this scenario?

- How should the company redesign the deployment to balance efficiency with fairness and accountability?

- What regulatory guardrails should India mandate for AI in insurance and other financial services?

- What’s the role of human oversight and explainability in this context?
