Key Highlights:

- India’s generative AI startups raised a record $524 million in the first seven months of 2025, a five-year high

- Celebrity victories in deepfake cases set legal precedents for personality rights protection nationwide

- CERT-In’s high-severity advisory reveals deepfakes cost companies millions through sophisticated fraud schemes

- Digital literacy programs reach millions while detection technologies struggle against evolving AI capabilities

- Draft Digital India Act proposes comprehensive framework to balance innovation with security concerns

The Digital Doppelganger Dilemma

India stands at the crossroads of an unprecedented technological revolution where artificial intelligence’s creative power collides with its potential for deception. Digital impersonation through deepfakes and generative AI has evolved from science fiction curiosity to a clear and present danger threatening the fabric of democracy, commerce, and personal dignity.

Deepfakes – synthetic media created using deep learning algorithms – represent a quantum leap in AI’s ability to fabricate reality. These AI-generated videos, images, and audio recordings can portray individuals saying or doing things they never said or did, with alarming authenticity.

The technology matters today because 75% of Indians viewed deepfake content in 2024, while global deepfake incidents are rising at triple-digit percentage rates. For policymakers, administrators, and citizens, this represents both an innovation opportunity and a governance nightmare requiring immediate attention.

Deepfakes and Digital Impersonation: The Mechanics of Deception

How Deepfakes Work

The technology behind deepfakes primarily relies on Generative Adversarial Networks (GANs), in which two neural networks are trained against each other: a generator produces synthetic content, and a discriminator tries to distinguish it from real samples. Each improvement in the discriminator pushes the generator toward more realistic output. Voice cloning technologies can now replicate speech patterns from minimal audio samples, while facial manipulation algorithms can seamlessly replace faces in video content.
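The generator-versus-discriminator dynamic can be illustrated with the loss values each network tries to minimize. The following is a minimal sketch, not a working deepfake model: it assumes the standard binary cross-entropy formulation of GAN training, and the function names and probability values are hypothetical, chosen only for illustration.

```python
import math


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Cross-entropy loss the discriminator tries to minimize.

    d_real: probability the discriminator assigns to "real" for a real sample.
    d_fake: probability the discriminator assigns to "real" for a generated sample.
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake: float) -> float:
    """Non-saturating generator loss: small when the fake is scored as real."""
    return -math.log(d_fake)


# Illustrative values only (assumed, not measured).
# Early in training the discriminator easily spots the fake.
early = (discriminator_loss(0.9, 0.1), generator_loss(0.1))
# Later the discriminator can no longer tell real from fake.
late = (discriminator_loss(0.5, 0.5), generator_loss(0.5))

print(f"early: D loss={early[0]:.3f}, G loss={early[1]:.3f}")
print(f"late:  D loss={late[0]:.3f}, G loss={late[1]:.3f}")
```

As the generator improves, its loss falls while the discriminator's loss rises, which is the sense in which the two networks compete.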

Modern deepfake creation involves:

- Data harvesting from social media profiles and public content

- Training AI models on thousands of images and audio samples

- Synthesis algorithms that blend real and artificial elements

- Post-processing techniques that eliminate detection markers

Real-World Cases from India

Political Manipulation During Elections

The 2024 Lok Sabha elections witnessed unprecedented deepfake deployment for political manipulation. Fake audio clips and manipulated videos of political leaders spread rapidly across social media platforms, potentially influencing voter perceptions and democratic processes.

Recent incidents included the BJP posting four fake audio clips targeting NCP (SP)’s Supriya Sule on the eve of Maharashtra assembly elections, prompting CERT-In to issue a high-severity advisory.

Banking Frauds and Identity Theft

Kerala reported India’s first sophisticated deepfake fraud case involving a 73-year-old victim who lost ₹40,000 to scammers using AI-generated voice technology to impersonate his former colleague. The “digital arrest” scam phenomenon has emerged, where criminals use deepfaked authority figures to extort money from victims.

Corporations are targets as well. In the Arup Engineering case in Hong Kong, employees transferred $25 million after participating in a video conference featuring entirely AI-generated participants impersonating company executives.

Celebrity and Common Citizen Targeting

High-profile cases include deepfake videos of actresses Rashmika Mandanna and Katrina Kaif, leading to swift police action under multiple legal provisions. The McAfee 2024 survey revealed that 45% of Indian consumers said they or someone they know had fallen victim to deepfake shopping scams.

Legal and Regulatory Landscape in India

Current Legal Framework

Information Technology Act, 2000 – Key Provisions

India’s primary cyber law offers several relevant sections for addressing deepfakes:

- Section 66C (Identity Theft): Punishes fraudulent use of another person’s identity electronically, with penalties up to 3 years imprisonment and ₹1 lakh fine

- Section 66D (Impersonation): Addresses cheating through impersonation via computer resources, carrying similar penalties

- Section 66E (Privacy Violation): Covers unauthorized capture and transmission of private area images, punishable by 3 years imprisonment or ₹2 lakh fine

- Sections 67, 67A, 67B: Target obscene and sexually explicit content transmission

IT Rules 2021 – Platform Accountability

The Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules 2021 mandate specific obligations:

- Rule 3(1)(b) prohibits eleven types of content including deepfakes and impersonation

- 36-hour removal timeline for reported deepfake content upon complaints

- 24-hour takedown requirement for sexually explicit deepfakes and impersonation content

- Intermediaries must inform users about prohibited content and legal consequences
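For a compliance team, the removal windows listed above translate into concrete deadlines. The sketch below assumes the 36-hour and 24-hour windows exactly as stated in this article; the category labels and function names are hypothetical, and the code is an illustration rather than a statement of how any platform implements the Rules.

```python
from datetime import datetime, timedelta, timezone

# Removal windows as stated in this article; category labels are hypothetical.
TAKEDOWN_WINDOWS = {
    "reported_deepfake": timedelta(hours=36),
    "sexually_explicit_or_impersonation": timedelta(hours=24),
}


def takedown_deadline(reported_at: datetime, category: str) -> datetime:
    """Return the latest time by which the content should be removed."""
    return reported_at + TAKEDOWN_WINDOWS[category]


def is_overdue(reported_at: datetime, category: str, now: datetime) -> bool:
    """True if the removal window for this complaint has already passed."""
    return now > takedown_deadline(reported_at, category)


# Example complaint received at 09:00 UTC on 1 January 2025.
reported = datetime(2025, 1, 1, 9, 0, tzinfo=timezone.utc)
print(takedown_deadline(reported, "reported_deepfake"))
print(takedown_deadline(reported, "sexually_explicit_or_impersonation"))
```

Using timezone-aware timestamps avoids ambiguity when complaints and removals are logged in different regions.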

Bharatiya Nyaya Sanhita, 2023

Section 353 penalizes spreading misinformation and disinformation, while Section 356 addresses defamation through “visible representation” including deepfakes.

Landmark Judicial Interventions

Anil Kapoor vs. Simply Life India (2023)

This watershed case established crucial precedents for personality rights protection. The Delhi High Court granted comprehensive injunctions against:

- AI deepfake creation using Kapoor’s likeness

- Commercial exploitation of his signature phrase “Jhakaas”

- Merchandise sales featuring his unauthorized image

- Digital manipulation across all platforms

The court recognized celebrities’ right of endorsement as a major livelihood source, making unauthorized AI impersonation legally actionable.

Asha Bhosle Voice Cloning Victory (2025)

The Bombay High Court protected legendary singer Asha Bhosle’s personality rights against AI voice cloning and image misuse, setting precedent for digital identity protection. The court noted how celebrities are “susceptible to being targeted by unauthorised generative AI content”.

Recent Celebrity Protection Wave

Aishwarya Rai Bachchan and Abhishek Bachchan secured Delhi High Court protection against AI-generated misuse in September 2025. Filmmaker Karan Johar also obtained similar relief against deepfake manipulation.

Jackie Shroff’s Personality Rights (2024) – The Delhi High Court noted that unauthorized use “dilutes the brand equity painstakingly built by the plaintiff over the years”.

Emerging Regulatory Framework

Government Advisories and Guidelines

MeitY issued three critical advisories addressing deepfakes:

- November 2023: Required intermediaries to identify and remove deepfakes within specified timelines

- December 2023: Mandated user education about prohibited content and legal consequences

- March 2024: Introduced watermarking requirements for deepfake content

CERT-In’s Role

The Indian Computer Emergency Response Team issued a high-severity advisory in November 2024, highlighting deepfakes’ use in financial fraud, disinformation, and social engineering attacks. CERT-In is actively testing anti-deepfake detection technology to combat AI-driven scams.

Draft Digital India Act

The proposed legislation aims to replace the IT Act 2000 with comprehensive AI governance provisions, including:

- Specific deepfake definitions and prohibitions

- Enhanced platform liability frameworks

- Mandatory AI risk assessment requirements

- Cross-border enforcement mechanisms

Commercial Exploitation of Generative AI in India

Market Growth and Investment Trends

India’s generative AI startup ecosystem has witnessed explosive growth, with the startup base expanding 3.6X from H1 2023 to H1 2024. Indian GenAI companies raised $524 million in the first seven months of 2025, marking a five-year high and representing four times the $129 million raised in 2021.

Key investment patterns include:

- 71% of net new investments flowing to native model development

- 17+ Indic and vertical models launched within a year

- 130+ GenAI assistants operational, with 4X growth in count

- 74% domestic PE/VC participation in the investor mix

Industry-Specific Applications

Healthcare Innovation

Startups like Dozee and Prudent AI are developing domain-specific platforms for medical diagnostics and patient monitoring using generative AI. These applications include synthetic medical imaging, drug discovery acceleration, and personalized treatment recommendations.

Banking and Financial Services

Financial institutions deploy generative AI for:

- Automated customer service through AI avatars

- Fraud detection using synthetic data patterns

- Risk assessment modeling with AI-generated scenarios

- Regulatory compliance through automated reporting

Creative Arts and Media

The entertainment industry leverages generative AI for:

- Automated dubbing and voice synthesis for multilingual content

- Virtual avatars for deceased or unavailable actors

- Synthetic media creation for cost-effective production

- Content localization across India’s diverse linguistic landscape

Enterprise Automation

Leading investments target enterprise software companies like Fractal Analytics, AtomicWork, and TrueFoundry, focusing on AI tools that automate non-core business functions.

Commercial Risks and Challenges

Identity Misuse and Brand Dilution

Unauthorized commercial exploitation of personalities has led to numerous legal battles, with celebrities seeking protection against merchandise sales using their AI-generated likenesses.

Copyright Violations

Generative AI training on copyrighted material without authorization creates legal grey areas, because Section 52 of the Copyright Act, which lists acts that do not constitute infringement, does not explicitly address deepfakes.

Reputational Harm

Deepfake-based scams targeting corporate executives have resulted in millions in losses, with the Arup Engineering case demonstrating vulnerabilities in business communications.

National Security Implications

Democratic Process Threats

Political deepfakes during elections pose existential threats to democratic integrity. The Election Commission expressed serious concerns about deepfake AI’s potential to spread false narratives and manipulate voter behavior.

Information Warfare

Cross-border cyber activities, including Pakistan-linked groups using deepfakes during conflicts like the Pahalgam attack, demonstrate deepfakes’ role in modern information warfare.

Social Cohesion Risks

Deepfakes targeting religious figures and community leaders can trigger communal tensions and undermine social harmony.

Economic Impact Assessment

Financial Fraud Escalation

CERT-In’s advisory reveals deepfakes enable sophisticated financial crimes:

- Average corporate loss per incident: $500,000

- Large enterprise losses: $600,000+

- Projected global losses by 2027: $40 billion

Market Confidence Erosion

Synthetic media manipulation can trigger stock market volatility and undermine investor confidence in digital communications.

Gender-Specific Vulnerabilities

Women as Primary Targets

Research indicates disproportionate targeting of women through deepfake pornography and revenge content. Non-consensual intimate deepfakes cause severe psychological trauma and social stigmatization.

Legal Protection Gaps

Despite personality rights evolution, enforcement mechanisms remain inadequate for protecting women from deepfake harassment.

Detection Technologies and Digital Literacy

Forensic Advances and Limitations

Technical Detection Methods

Modern deepfake detection employs multiple approaches:

- Convolutional Neural Networks (CNNs) for spatial inconsistency detection

- Recurrent Neural Networks (RNNs) for temporal pattern analysis

- Transformer architectures like BERT for text-based detection

- Hybrid frameworks combining multiple detection modalities

Detection Tool Ecosystem

Available detection tools include:

- Hive Moderation, Logically, Originality.ai for content verification

- Deepfake-o-Meter for academic research and analysis

- Hiya for voice deepfake detection

- Sentinel, Sensity, Fake-catcher for real-time monitoring

Technical Limitations

Current detection faces significant challenges:

- False positive rates in low-quality media analysis

- Sophistication arms race between generation and detection

- Limited effectiveness against compressed social media content

- Training dataset biases affecting accuracy across demographics
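Two of the limitations above, false positives and uneven accuracy across demographics, can be measured by computing a detector's false positive rate separately for each group. The following is a minimal sketch under stated assumptions: the record format, group labels, and toy data are all made up for illustration and do not come from any real detector or dataset.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """Compute the per-group false positive rate.

    records: iterable of (group, is_fake, flagged_as_fake) tuples.
    A false positive is a genuine item that the detector flagged as fake.
    """
    false_positives = defaultdict(int)
    genuine_total = defaultdict(int)
    for group, is_fake, flagged_as_fake in records:
        if not is_fake:
            genuine_total[group] += 1
            if flagged_as_fake:
                false_positives[group] += 1
    return {g: false_positives[g] / n for g, n in genuine_total.items()}


# Made-up toy records, for illustration only.
toy_records = [
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_a", False, True),
    ("group_a", False, False),
    ("group_a", True, True),
    ("group_b", False, True),
    ("group_b", False, True),
    ("group_b", False, False),
    ("group_b", False, False),
    ("group_b", True, True),
]

rates = false_positive_rate_by_group(toy_records)
print(rates)
```

A large gap between groups in such a breakdown would suggest that the training data under-represents some of them, which is the bias concern raised in the list above.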

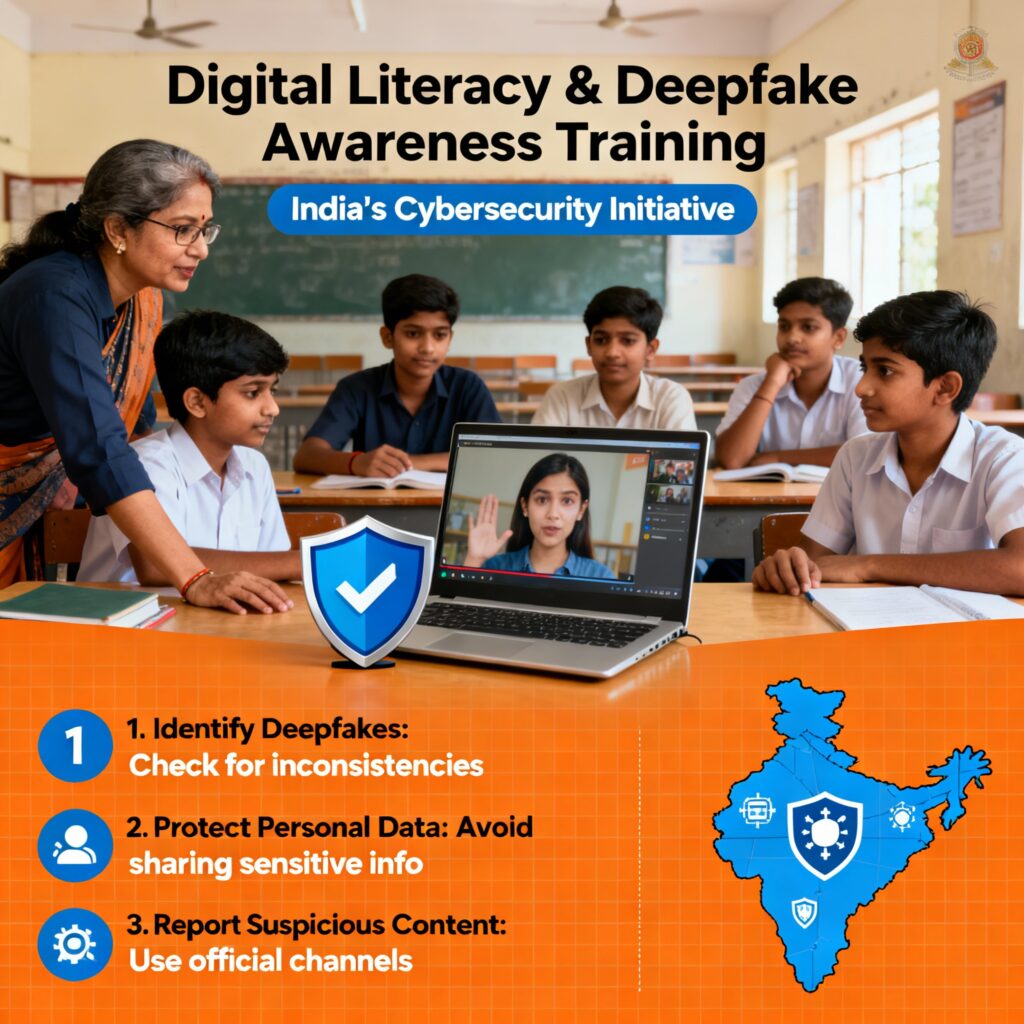

Digital Literacy Initiatives

Government Programs

National digital literacy efforts include:

- Information Security Education and Awareness (ISEA) programs

- State-level workshops for law enforcement agencies

- “Satyameva Jayate” program in Kerala focusing on responsible digital engagement

Civil Society Contributions

Organizations advancing digital literacy:

- Digital Empowerment Foundation (DEF) develops contextual toolkits for rural communities

- Ideosync Media Combine’s “Bytewise Factcheck Fellowship” targets school students aged 13-17

- Shakti Coalition runs collaborative fact-checking during elections

Private Sector Involvement

Technology companies contribute through:

- Google’s partnership with Election Commission for verified information dissemination

- Social media platform fact-checking collaborations

- Industry stakeholder consultations with government agencies

Global Best Practices and Policy Recommendations

International Regulatory Approaches

United States Model

Several US states have implemented specific deepfake legislation with criminal penalties for malicious use, while maintaining First Amendment protections for legitimate applications.

European Union Framework

The EU AI Act 2024 defines deepfakes as “AI-generated or manipulated content resembling existing persons, falsely appearing authentic”. The Digital Services Act mandates platform liability for synthetic media removal.

Denmark’s Copyright Innovation

Denmark’s proposed legislation allowing citizens to copyright their biometric characteristics represents a novel approach to personality protection in the AI era.

India’s Strategic Policy Options

Dedicated Legal Framework

Experts recommend establishing AI-specific legislation rather than relying on patchwork regulations:

- Clear deepfake definitions with illustrative examples

- Graduated penalties based on intent and harm caused

- Safe harbors for legitimate uses including parody and education

Enhanced Detection Infrastructure

- National AI forensics capabilities development

- Mandatory digital watermarking for AI-generated content

- Real-time detection systems integrated with social media platforms

Cross-Border Cooperation

- International collaboration through Interpol and bilateral treaties

- Technology sharing agreements for detection tools and techniques

- Harmonized legal standards for cross-jurisdictional enforcement

Policy Recommendations for India’s AI Governance

Immediate Regulatory Priorities

Comprehensive AI Legislation

India requires dedicated AI governance legislation that:

- Defines deepfakes with technical precision and legal clarity

- Establishes graduated penalties reflecting harm severity and intent

- Provides clear exemptions for legitimate uses including journalism, education, and artistic expression

- Creates specialized enforcement mechanisms with technical expertise

Strengthened Platform Accountability

Enhanced intermediary obligations should include:

- Proactive deepfake detection using AI-powered monitoring systems

- Transparent reporting mechanisms for synthetic media identification

- User verification systems for content authenticity

- Rapid response protocols for harmful content removal

Personality Rights Codification

Legislative recognition of personality rights through:

- Statutory protection for individual likeness, voice, and biometric characteristics

- Commercial licensing frameworks for authorized AI use

- Inheritance provisions for posthumous personality rights protection

- Collective management systems for rights administration

Long-term Strategic Framework

Digital Literacy as National Priority

Comprehensive education initiatives requiring:

- Curriculum integration from primary through higher education levels

- Public awareness campaigns leveraging traditional and digital media

- Professional development for educators, journalists, and law enforcement

- Community-based programs adapted to local linguistic and cultural contexts

Innovation-Security Balance

Policy frameworks promoting:

- Regulatory sandboxes for ethical AI experimentation

- Industry self-regulation through professional standards and codes

- Public-private partnerships for detection technology development

- Research and development incentives for beneficial AI applications

International Leadership

India’s global AI governance role through:

- Multilateral cooperation initiatives in forums like G20 and UN

- Technology diplomacy promoting responsible AI development standards

- Capacity building assistance for developing nations facing similar challenges

- Best practice sharing from India’s diverse linguistic and demographic experience

Conclusion: Navigating India’s Digital Transformation

India stands at a critical juncture where the transformative potential of generative AI intersects with unprecedented risks to democratic governance, individual privacy, and social cohesion. The evidence is clear: deepfakes and AI-generated synthetic media represent both a tremendous opportunity and an existential threat to India’s digital future.

The surge in generative AI investment, which reached $524 million in the first seven months of 2025, demonstrates market confidence in India’s technological capabilities. Simultaneously, celebrity legal victories and judicial recognition of personality rights indicate evolving legal frameworks capable of addressing AI-era challenges.

However, the scale of the challenge demands a comprehensive, coordinated response across government, industry, and civil society. CERT-In’s high-severity advisories and the government’s multi-stakeholder consultations represent positive steps, but dedicated legislation remains essential.

For UPSC aspirants and governance professionals, understanding these dynamics is crucial. The intersection of technology policy, legal innovation, and democratic protection represents core challenges for India’s administrative leadership. The ability to balance innovation promotion with security protection will define India’s success in the global AI economy.

The path forward requires evidence-based policymaking, stakeholder collaboration, and adaptive governance mechanisms capable of evolving with rapidly advancing technology. India’s response to the deepfake challenge could serve as a model for emerging economies worldwide, making this both a national imperative and a global responsibility.
